Hirsch's Cargo Cults Revisited

by Siegfried Engelmann

October, 2002

General

E. D. Hirsch's article "Classroom Research and Cargo Cults" argues that classroom research is unreliable in identifying effective practices. Hirsch asserts that information about effective practices is more likely to be generated by laboratory studies. He refers to vocabulary development to illustrate how laboratory research leads to consensus about how best to teach vocabulary. The reference to cargo cults comes from an excellent chapter in "Surely You're Joking, Mr. Feynman!" The point of the chapter (which should be required reading for every graduate student in the social sciences) is that if science is to be science, it must be basically honest, not merely a shell composed of the trappings and strategies used in legitimate science.

The cargo cults are groups in the South Seas that put on a show of technology, building runways, illuminating them at night, wearing hats that resemble those of a radio operator. This show is supposed to cause planes to land there and bring lots of treats to the locals. Feynman points out that education has a parallel type of superstitious behavior. "There are big schools of reading methods…, but if you notice, you'll see the reading scores keep going down — or hardly going up — in spite of the fact that we continually use these same people to improve the methods. There's a witch doctor remedy that doesn't work."

That's where the irony comes in. Hirsch writes as if he is basing his critique of classroom research on Feynman's position, but actually, the things he argues for are more consistent with cargo-cult beliefs than they are with the logic and intent of science. Hirsch's premise is basically that classroom research is relatively useless (tempered with a few caveats about how it, in concert with "laboratory-based research," would be the ideal package). In making the case for "laboratory science," as being the preeminent resource for information about reforms, he refers to a study that Feynman cites. In the ’30s, P. T. Young conducted a maze experiment with rats. The goal was to determine if the rats could be taught the relational rule that the food was in the alley three places down from wherever the rat was placed in the maze. The rats persistently went to the alley in which the food had been on the previous occasion. Young tried to get rid of the clues that permitted the rats to know where they were in the alley, so they would have to rely on relational information, not information about their absolute position in the maze. He controlled for odor. That didn't work. At last, he discovered that the rats were using auditory cues. They could tell where they were by the sound the floor made. By using a sand "floor," Young eliminated the spurious cues and the rats were now forced to learn the relational rule.

For Feynman, this study was the essence of scientific intent. Feynman pointed out that subsequent studies did not control the auditory variables the way Young did. What lessons does Young's experiment generate? For the educational scientist, a primary one would be that if the "teaching presentation" is consistent with more than one possible interpretation, at least some learners will pick up on unintended interpretations and that these inappropriate interpretations must be excised from the presentation in the same way Young excised the spurious odor and auditory cues. In rat terms, if you are reinforced for going to alley four, one interpretation you might derive would be, "Go to alley four for food." If you don't know which alley you last went to or where it is, you have to find out some other basis for discovering how to obtain the food.

This rule about competing interpretations has never been taken seriously by education, which is one of the reasons it is a cargo-cult science. Many first-graders have been reinforced for guessing at words and looking at the picture to figure them out — even though these operations cannot possibly work on material that is not syntactically predictable and doesn't have pictures. These children discover that they can't really read something without pictures and discussions and some other things that they don't clearly understand. They know that if the teacher called on them to read something that had not been "memorized" or keyed to pictures, they would fail.

The remedy would be to design beginning-reading material so the child cannot predict what the words say by sentence cues, first-letter cues, or picture cues. Once these avenues of information have been eliminated, the child is in a situation parallel to that of the rat in the sand-floor maze. The child is now forced to learn about the real details that predict reading success, just as Young's rats were forced to learn the relational rule.

Hirsch does not pursue this direction, or some of the others that are fairly clearly implied by what Feynman discusses. Instead he cites studies in which student performance was not reliably predicted by class size. Hirsch concludes, "The process of generalizing directly from classroom research is inherently unreliable." In Hirsch's defense, he does point out that the studies on class size do not consider possible interaction of class size with other variables; but he still draws the highly speculative conclusion, "The limitations of classroom research eliminate not only certainty, but also the very possibility of scientific consensus — a very serious problem indeed."

Of course, consensus in a cargo-cult science like education cannot be used as a legitimate measure of anything, certainly not as a guide to what is true and what is superstition. Polling the members of the cargo cult on when they suppose a plane will land and what their rationale or theory is would not be a reliable guide to finding out what makes the planes land. In the case of the Young study, consensus called for ignoring the outcomes of the experiment, just as consensus in education has fostered textbooks and beginning-reading practices that have created poor readers by promoting guessing, using context cues and picture cues to identify words.

Problems of Analysis

Hirsch's further articulation of the classroom-research issues is a tapestry of false dilemmas. He argues that if identifying the contribution of a single "factor" like class size to student performance cannot be reliably analyzed through classroom research, imagine the difficulty of trying to evaluate whole-school implementations.

"If [various] classroom factors had been experimentally controlled at the same time, then it would be extremely hard if not impossible to determine . . . just which of the experimental interventions caused or failed to cause which improvements. And if a whole host of factors are simultaneously evaluated in "whole-school reform," it's not just difficult, but . . . impossible to determine relative causality with confidence." (page 4)

Yes, if all of the variables are controlled, it would be not only impossible but extremely unenlightened to try to determine the effect of any one variable. But concern with one factor or variable represents a false dilemma. The point of the whole-school reform is to produce overall acceleration of student performance. The reform is not attempting to address questions of undisclosed "factors." The researchable question is, simply, What works well? Testing a whole-school reform model is a lot like testing cars. If one model outperforms the others the primary question has been answered. This model is the winner. If the goal is to find out why it is the winner, a completely different approach would be implied — a type that has never been done but that is implied by the Young experiment. The winning whole-school program would be completely implemented according to all the rules. Then the various provisions of the program would be modified singly, while trying to keep the others in place. If the program calls for particular correction procedures, they would be modified, but everything else would remain the same. The result would be an improvement in student performance, the same performance, or lower performance. In any case, the relative contribution of the "factor" that had been manipulated could be rather precisely identified, and its interaction with other variables would be at least partially established because the manipulation of the variable takes place within the context of the other variables.
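The one-provision-at-a-time procedure described above can be sketched in a few lines of Python. This is only an illustration of the design logic, not a description of any study that has been run. Apart from the correction procedures mentioned in the text, the provision names are hypothetical placeholders, and so are the function names and the tolerance parameter.

```python
# Sketch of the "modify one provision, hold the rest in place" design.
# Only "correction_procedures" comes from the text; the other provision
# names are hypothetical placeholders.

FULL_PROGRAM = {
    "correction_procedures": "as specified",
    "provision_b": "as specified",  # hypothetical
    "provision_c": "as specified",  # hypothetical
}


def single_factor_variants(program, changed_value="modified"):
    """Yield (provision, variant) pairs in which exactly one provision differs."""
    for name in program:
        variant = dict(program)      # copy, so every other provision stays in place
        variant[name] = changed_value
        yield name, variant


def classify(baseline_score, variant_score, tolerance=0.0):
    """Label a variant's outcome relative to the fully implemented program."""
    difference = variant_score - baseline_score
    if difference > tolerance:
        return "improvement"
    if difference < -tolerance:
        return "lower performance"
    return "same performance"


for provision, variant in single_factor_variants(FULL_PROGRAM):
    print(provision, "->", variant)
```

Each variant would be implemented and measured in classrooms, then compared with the fully implemented program through `classify`. Because only one provision changes at a time, any difference in outcome is attributable to that provision as it operates alongside the others.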

But it does not follow that because whole-school implementations involve many factors, they should be evaluated in the laboratory. Quite the opposite. They must be evaluated in the classroom because there, and only there, is where they are designed to perform. Consider an automotive-design parallel. Would there necessarily be agreement among engineers about what arrangement of suspension, engine, and drive train would work best for a given chassis, or why? Probably all would be able to refer to "factors" or combinations of "factors" that supported their view. The reason that engineering of this sort is not a cargo cult is that the investigators don't stop there. They build the cars and test them, not in the laboratory or a component at a time, but in real-life driving situations (or reliable simulations). Engineers recognize that performance is the final arbiter of what works and of which inferences were right in this application. Engineers adjust their theories on the basis of the performance outcomes, not vice versa.

The Gordian-knot solution may be the simplest way to deal with Hirsch's suggestion that classroom research is unreliable and does not generate implications about causality. Consider the following figures:

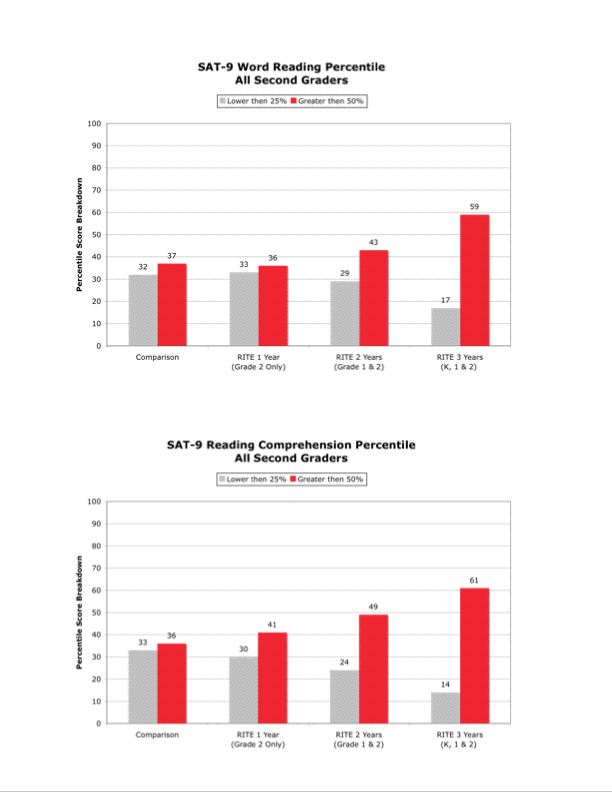

The two graphs show outcomes of students who went through an intervention referred to as the RITE Program. The figures show three main things:

- The RITE second-grade students who have been in the intervention for only one year perform about the same as the students in the comparison program, with almost as many students performing below the 25th percentile as those above the 50th percentile.

- The RITE students who have been in the program for two years show some advantage over the comparison group in both word reading and comprehension and perform close to the level of "average students."

- The RITE students who have been in the program for three years show a great advantage over the comparison group in both word reading and comprehension, with about 60 percent of the students performing above the 50th percentile and fewer than 20 percent of the students performing below the 25th percentile.

In summary, the RITE program apparently creates a cumulative effect for what seems to be the same population as the comparison students, with greater improvement a function of the number of years students have been in the program.

The measure that is used (Stanford Achievement Test, 9) seems to pass tests of "face validity," which means that it actually tests something about word reading and reading comprehension. Also, the gains are not niggling. The difference between the comparison group and the RITE 3-year students is over a standard deviation. But could these results be generalized?

Without knowing more, we couldn't say with confidence. If there were only fifty or sixty students in the whole study, we could be skeptical about whether the same kind of trends and differences would occur if the study were repeated. Possibly a handful of exceptional teachers, rather than the RITE program, accounted for the difference.

Let's say there were 4000 students in the RITE group and the same number in the comparison group. Let's say that the comparison group used a "research based, phonics reading program." Let's say that the boy-girl ratios and other demographic details were the same for comparison and experimental groups. Finally, let's say that the study was conducted by an external evaluator (reducing the possibility of "bias").

All the "let's says" are real. RITE stands for the Rodeo Institute for Teacher Excellence (Houston). The evaluator was the Texas Institute for Measurement, Evaluation, and Statistics. The report was published in 2002.

Because the RITE program had to be replicated in many classrooms with many teachers, over three years, we could now say with a great deal of confidence that the results would occur the same way if the program were implemented the same way it was in the study. With the crutch of statistics, we are able to express our confidence with numbers. If this outcome occurred by chance, this particular pattern would not occur more than once every 10,000 times the experiment was repeated (p < .0001). Therefore it did not occur by chance. It was a function of a reliable and predictable design. There is very little margin for arguing either that the program was not effective, or that the results are not generalizable.

The evaluation does not have to answer questions about any "factor," or processes for inducing "vocabulary development" or "phonemic awareness." The evaluation does not even have to answer the question about whether the RITE Institute used a "phonics based program." The program, through whatever combination of practices and rationale, works better than the comparison program. A school interested in achieving the kind of gains RITE achieved with lower-performing students is provided with very specific information about how to do it.

This situation is parallel to that of someone who wants to buy a high-performance automobile. If the tests are well done, they provide the potential buyer with precise information about which models perform best.

Hirsch seems to argue that results of this type are not even possible.

". . . The process of schooling is exceedingly context-dependent. Children's learning is deeply social, lending each classroom context a different dynamic. Moreover, learning is critically dependent on students' relevant prior knowledge. Neither of these contextual variables, the social and the cognitive, can be experimentally controlled in real-world classroom settings."

Thus, Hirsch has apparently determined through ratiocination either that the RITE study could not have occurred as reported, or that the statistical estimates are baloney and that the outcome probably would not occur this way again because of social, cognitive, and contextual variables that affect performance.

In fact, the study tends to show the irrelevance of these variables. This is not to deny their existence, but to deny that they can be legitimately used as explanatory devices for what works or why it works. Consider that the same underlying dynamics (prior learning and social variables) affect the comparison students as well as the experimental students. If one model achieves great gains over the other, whatever possible negative effect these variables may have has been countered by the effects of the successful intervention.

Hirsch's ultimate conclusion about data is, "One major assumption of educational research needs to be examined and modified — i.e., the assumption that data about what works in schools could be gathered from schools and then applied directly to improve schools." This proclamation seems to mean that if teachers were trained exactly as they were in the RITE study and performed according to the same criteria and schedules with the same student population, the results would not be the same. That seems to be a hypothesis for a study, but the odds seem to be greatly against Hirsch. It would be a lot like observing automobile comparisons of two premier cars in which A thrashes B on say fifty consecutive runs and then contending that on the next run under the same conditions, the results would be different. That assertion implies a test, but not one that would generate very favorable odds.

After concluding that classroom research is unreliable, Hirsch presents a potpourri of ideas and observations that are somehow supposed to establish a case for laboratory research and "theories" of cognitive science, whatever these may be. The field of "cognitive science" would seem to cover a broad and unholy spectrum, from studies that deal with quasi-neurological data like "channel capacity," and "chunking" to those that propose models of attention and performance, to those that articulate some of the variables in effective instructional sequences and interventions. Hirsch asserts that this group has arrived at consensus on what Hirsch believes are important strategic matters that have implication for effective practices.

The fact that there is consensus on underlying theoretical issues is not relevant to the empirical question "What works better?". Again, this is not to say that some of the observations Hirsch makes are "wrong"; rather, they are misdirected and don't serve as evidence for the conclusions Hirsch tries to draw. If no planes land, no planes land, which means the theory or observations did not generate successful applications.

At the heart of Hirsch's position is the strange relationship he describes between vocabulary acquisition and the acquisition of other skills. According to Hirsch, explicit instruction is proven for beginning reading and for other tasks, like identifying the sex of day-old chicks. He observes that learning a complex skill or discrimination is greatly facilitated if it is broken down into articulate parts that are presented through explicit instruction (just as it was done in the Young experiment). Interestingly, he refers to the practices for teaching beginning reading that derived not from "laboratory studies," but from classroom studies. The preoccupation with "phonemic awareness" derived from observations that specific successful instructional sequences had phonemic-awareness exercises.

Although the explicit approach is appropriate in all these applications, there is an exception, according to Hirsch, which is vocabulary acquisition.

- ". . . word meanings are acquired gradually over time through multiple exposures to whole systems of related words."

- Vocabulary acquisition takes a long time (unlike other skills).

- Young children learn slowly…

Hirsch further contends that the cognitive scientists provide "well tested advice on how to teach vocabulary."

"[Teachers and administrators] would find a consensus that, depending on the prior knowledge of students, both isolated and contextual methods need to be used-isolated instruction for certain high-frequency words students may not know or may not recognize by sight, like the prepositions "about," "under," "before," "behind," but carefully guided contextual instruction for other words. Teachers and administrators would learn that word meanings are acquired gradually over time through multiple exposures to whole systems of related words, and that the most effective type of contextual word study is an extended exposure to coherent subject matters."

The first problem with this formula is the amphibology involving the notion that word meanings are acquired gradually. The whole of vocabulary development may continue over a long period of time; however, this fact does not imply that the learning of specific parts takes a long time (although it may take a long time under some conditions). Hirsch's argument is a lot like arguing that the achievement of "math development" to calculus takes a long time; therefore, all but the most elementary parts that compose this whole take a long time, and are therefore best taught implicitly.

A more cogent argument based on the uniform superiority of explicit practices over indirect, discovery, or implicit techniques is that for any given set of words, related or unrelated, explicit teaching will outperform any form of implicit teaching. It's not clear exactly what Hirsch means by "contextual instruction," but it is clear that he's got it wrong. If a child does not know the word "before," effective teaching would involve a number of examples that clearly show both the meaning context and the syntactical context for the word. Clear communication can't be achieved by saying, for instance, "Before means prior." The child doesn't understand prior or any of the other synonyms for temporally before. In contrast, many obscure words are readily taught by using the direct formula of providing a "synonym" or verbal description. For instance: If something is conspicuous, it is very noticeable; the ulna is the bone in the forearm that is closest to the little finger.

Hirsch parenthetically presents a caveat that tarnishes his "well tested advice."

"Cognitive scientists have reached agreement, for example, about the chief ways in which vocabulary is acquired. This theory gained consensus because it explains data from many kinds of studies and a diversity of sources. While incomplete in causal detail, it explains more of what we know about vocabulary acquisition than does any other theory."

The observation that it is "incomplete in causal detail" means that they do not have the bottom-line data about what works. They have never caused serious vocabulary development in lower-performing students. Without the outcome data that was clearly caused by an intervention, there can be no "well tested advice," only some degree of a cargo-cult science.

Hirsch further observes:

". . . This scientific consensus arose not just from classroom educational research but principally from laboratory studies and theoretical considerations unconnected to the classroom. . . . For instance, . . . a top-of-the-class 17-year-old high-school graduate knows around 60,000 different words. That averages out to a learning rate of 11 new words a day from age two. Although this estimate varies in the literature from 8 to 18, its range implies by any reckoning a word-acquisition rate that cannot be achieved by studying words in isolation. There is notable cognitive research on the subject of vocabulary acquisition. Synthesis of this research is a more dependable guide to education policy than the data derived from classrooms."

Hirsch uses "developmental" data as a basis for drawing conclusions about the design of instruction. This strategy has never worked, probably because it is logically impossible. The norms for vocabulary tell only what the typical top-of-class student learned in a setting that presents undisclosed "teaching." Therefore, they do not have implications for what could be done through concerted instruction with a classroom of at-risk second-graders. The quest to derive effective instruction from Piagetian developmental norms failed completely.

Vocabulary

Interestingly, Piaget used some of the same descriptions about cognitive operational knowledge that Hirsch uses to describe vocabulary. Piaget said that learning takes a long time (is subsumed by development), requires concrete manipulation (the beans that Hirsch refers to in one of his examples), and requires observation of process as well as outcome. In an experiment (which has been ignored in the same way that Young's work was) kindergarten-age, at-risk children were taught formal operational logic through procedures that showed no processes or transformations, involved no manipulation of concrete objects, presented no real objects (only simple two-dimensional representations) and required a total teaching time of less than three hours. These children passed tests involving specific gravity (which is assumed to require a formal-operational developmental underpinning not observed in children under about 12 years old). The details of this teaching were not implied by any "developmental data," but by the logic of the content that was to be taught.

Hirsch expresses concern with the generalizability of classroom data; however, the generalizability of data on a top-of-class 17-year-old is prima facie suspect. Possibly less than 5% of the student population is top of class, and the student that Hirsch describes is clearly not top-of-class in inner-city schools. It is hard to see how conclusions about this top-of-class student even imply details about the training program she received. So exactly how could it have instructional implications for lower-performing second-graders? Even if we wanted all students to acquire the same vocabulary that the top-of-class student has, which seems fanciful, we wouldn't know whether it was possible within the parameters of the school day, even if we taught nothing but vocabulary-building all day long.

A far more parsimonious and relevant basis for designing instruction would be to identify what vocabulary and "coping skills" students need to perform in particular academic areas, including the area of reading unspecified texts that have vocabulary and syntactical structures students may not have learned.

The top-of-class 17-year-old is irrelevant to this pursuit because her learning is not even predicted from the school program she received. A lot of other students in her class received this program and are not top-of-class students. Therefore, whatever sets her apart from the "average" student is a function of something other than school instruction. Possibly it is genes, in which case there isn't much we can do about it. Possibly her performance is a function of out-of-school influences that prepared her with vocabulary, provided reinforcement for her efforts, and sometimes answered her questions: the kind of help the top-of-class student in East St. Louis, for instance, often does not have. Possibly it's a combination of genes and home influence. In any case, if our domain of influencing change is the school, it doesn't seem wise to argue from a model that is not an attainable product of a school program.

Hirsch's appeal to consensus about how to teach word meanings has four other problems:

- The numbers that he presents on vocabulary acquisition are suspect;

- The criterion of having "learned" these word "meanings" is fuzzy;

- His argument for the use of context for teaching words is a false dilemma; and

- His conclusion that the data on numbers imply implicit instruction of some sort does not follow from his premises.

The numbers:

Hirsch selects 60,000 as the number of word meanings for the top-of-class student. I did a very unscientific experiment that may be way out in left field, but I came up with a smaller number. I didn't have a top-of-class high-school student handy, but I had a top-of-class graduate student. I opened a college dictionary that had about 70,000 entries to four random pages. I read the words, spelled them, told her the part of speech for those she questioned, and asked her if she knew what they meant. On three of the pages, she did not know all the words. On one page, she did not know bourn, bourrée, bouse, boustrophedon, bouzouki, bowerbird, bow pen, bowsprit. She also didn't know a second meaning of bower (a bow anchor). She probably didn't know bovid, but I gave her half credit. "Could that be something related to a bovine?" "It is an adjective for bovine."

Also, I did not present most capitalized entries because I didn't think they were fair (Bournemouth, Bow bells, Bowditch, Bowen, Bowie State). I did present Bowie and Bowling Green. I did not present six entries because they were either dialect, slight variations of the same word (two bowman entries for instance), obsolete, or spelling variations (bowlder for boulder). The page had 58 entries. Eleven were discards. Of the 47 remaining, she missed 9.5 (half credit for bovid). So her score on that page was 37.5/47 or 80%. Her performance on the other pages was 100%, 65%, 39%. The low-scoring page had lots of sodium words, which she could identify only as a substance composed of sodium. (She got sodium chloride, sodium fluoride, sodium glutamate, and Sodium Pentothal, but she was not able to identify the others.) Also, I threw out a lot of items on this page: variant spellings, obsolete words, capitalized words I didn't know and that seemed trivial, affixes, and obscure slang words. She also missed sociometry, socal, socman, sokeman, sodalite.

Indeed, my decisions were less than operationally delineated, but if we assume that 15% of the entries are not fair and that the top-of-class person would average 80% on the others, the total number would be something on the order of 48,000, which is quite a bit less than 60,000. Personally, I don't believe it's that high. Also of interest is that a very extensive analysis of morphology for spelling, conducted in the '70s, came up with a number of 30,000 words that seemed to be fairly exhaustive.
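The arithmetic behind this estimate is easy to check. The sketch below uses only figures given in the text: a dictionary of about 70,000 entries, 15% of entries treated as unfair, an assumed 80% known, and the four page scores. The function names are illustrative. The unweighted mean of the four pages is an added calculation, not one the experiment reported, and it ignores differences in the number of entries per page.

```python
# Back-of-envelope check of the dictionary-sampling estimate.
# All numeric inputs come from the text; function names are illustrative.

def page_score(entries, discards, missed):
    """Fraction of the fair (non-discarded) entries that the subject knew."""
    fair = entries - discards
    return (fair - missed) / fair


def estimated_vocabulary(dictionary_entries, unfair_fraction, known_fraction):
    """Entries judged fair, scaled by the fraction of them that is known."""
    return dictionary_entries * (1 - unfair_fraction) * known_fraction


bow_page = page_score(entries=58, discards=11, missed=9.5)
estimate = estimated_vocabulary(70_000, unfair_fraction=0.15, known_fraction=0.80)

# Unweighted mean of the four reported page scores (an added calculation).
page_scores = [0.80, 1.00, 0.65, 0.39]
mean_score = sum(page_scores) / len(page_scores)
estimate_at_mean = estimated_vocabulary(70_000, 0.15, mean_score)

print(f"page score: {bow_page:.0%}")                     # 37.5 / 47
print(f"estimate at 80% known: {estimate:,.0f}")         # about 48,000
print(f"mean of four pages: {mean_score:.0%}")
print(f"estimate at that mean: {estimate_at_mean:,.0f}")
```

On these assumptions the 80% figure gives 47,600, which rounds to the 48,000 cited. The four pages average 71%, and that figure would put the estimate near 42,000.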

At least some cognitive scientists favor this range over the one that Hirsch suggests. Biemiller and Slonim (2001) concluded that the learning rate of new words for the top-of-class student is more on the order of about 3 words per day, not 8-18. So there seems to be far from perfect consensus on number of words. Also, Biemiller endorses explicit, direct instruction. So there isn't perfect consensus on methods for inducing vocabulary.
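The gap between these rates is easier to see as totals. The following sketch assumes, purely for illustration, that each daily rate holds constant from age two to age 17 with 365 days per year. That span is taken from Hirsch's statement; applying it to the Biemiller and Slonim rate is an assumption, since their figure may rest on a different age range or a different definition of a word.

```python
# Convert between a total vocabulary and a constant daily learning rate.
# The age span (2 to 17) comes from Hirsch's statement; treating every rate
# as constant over that span is a simplifying assumption.

DAYS_PER_YEAR = 365
START_AGE, END_AGE = 2, 17
LEARNING_DAYS = (END_AGE - START_AGE) * DAYS_PER_YEAR  # 15 years of days


def implied_rate(total_words):
    """Words per day needed to reach total_words by END_AGE."""
    return total_words / LEARNING_DAYS


def implied_total(words_per_day):
    """Total words accumulated by END_AGE at a constant daily rate."""
    return words_per_day * LEARNING_DAYS


print(f"60,000 words -> {implied_rate(60_000):.1f} words per day")
for rate in (3, 8, 11, 18):
    print(f"{rate:>2} words per day -> {implied_total(rate):,} words")
```

Under these assumptions 60,000 words works out to about 11 per day, matching Hirsch's figure. The 8-to-18 range spans roughly 44,000 to 99,000 words, while 3 words per day yields about 16,000.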

The criterion for learning:

Some of the studies that generate the data about which word meanings have been learned are wanting. The typical test is a reading test in which some determination is made about whether the students understood what they read. But the test isn't a precise measure of the student's receptive understanding of the word (let alone of the ability to use the word expressively).

Another problem with the number of words generated by some studies is that the criterion may not be based on words, or entries in the dictionary, but on number of "meanings" words are judged to have (with one word generating more than one meaning). If my judgments on whether my subject knew a word were arbitrary, so are those about what constitutes a different meaning. Does bovine have two meanings because we can refer to a bovine (noun) and a member of the bovine family (adjective)? If it is counted as two, it is questionable because anybody who knew the noun and knew much about the language would have no trouble with the adjectival transformation.

In any case, there is at least some doubt about the accuracy of the numbers, the preferred methods for inducing vocabulary, and the criteria for whether word meanings are learned. These doubts are adumbrated by the lack of implications that consensus on acquisition has for teaching. You find out the number that can be taught to the targeted population by experimenting with different approaches. These would make excellent classroom studies.

Conclusions about teaching words in context:

Hirsch declares, "Implicit rather than explicit learning is . . . the superior method for vocabulary growth, since word acquisition occurs over a very long period, and advances very, very gradually along a broad front." Aside from the fact that learning 11 words a day would not qualify as "very, very gradual" learning, Hirsch seems to refer to implicit versus explicit instruction as if this difference is related to teaching words in isolation versus teaching words within some kind of context. The two issues are only obliquely related, but if words are taught explicitly through any kind of effective instruction, they will be presented not only in isolation but in sentences. The reason is that it is not possible to teach meaning-usage of many words without the sentence context. Even for nouns, the context shows restrictions that are not always evident from the "definition." For words like refer, the context information is essential. The American Heritage dictionary on my desk provides only the following definitions for the verb intransitive meaning: to make reference or allusion; to apply, as for information or reference.

It would be impossible to use this word as it is conventionally used without a lot more information. You don't say the sentence, "I referred," or "I referred with the first page of the book," or "I referred for the book." All of these sentences, however, meet the dictionary definition. In addition to being restricted by the word context of refer to X, the word is also restricted to a narrow range of meaning contexts, particularly with respect to identifying the agent that does the referring. It is probably poetic but not common usage to say something like, "My eyes referred to her figure," even though this sentence would be within the parameters described by the word context. Therefore, any teaching that makes sense would have to present a fair number of examples of the word in sentence (or phrase) contexts to show both the meaning context and the word context.

The dictionary sometimes presents phrases that are not very enlightening even for unknown nouns. For the word cynosure, the definition is: (noun) Something that strongly attracts attention by its brilliance, interest, etc.: the cynosure of all eyes. (By the way, this same definition occurs in just about all dictionaries.)

The dictionary gives the part of speech, tells what the word means, and gives a phrase that uses the word. Yet, it may be hard for somebody to use this information to generate sentences that incorporate the word. If you're not familiar with the word, try it. Is the word always followed by an "of phrase" or prepositional phrase?

If the new word is equated with a known meaning of a phrase, usage is easier to demonstrate. 'A cynosure is an attention magnet. Listen: They saw a mountain that was an attention magnet. Say it another way: (They saw a mountain that was a cynosure.) He told us that his cousin was an attention magnet. Say it another way . . . '

Conclusions about implicit instruction:

Hirsch concludes that the number of words that a child would have to learn per day (11) to reach the 60,000 implies that the only sensible vocabulary-building program is one that promotes some sort of amorphous accretion of knowledge, not the acquisition of measurable units of knowledge. This argument is logically an argument from ignorance. It says, in effect: We don't know how to do it; the cognitive scientists have never done it in the laboratory; it has been observed to occur in uncontrolled and undocumented settings; therefore it is best achieved through implicit instruction. This kind of logic is frowned on by legitimate sciences.

Feynman pointed out that we must be honest and indicate both the extent to which we know something and the extent to which we don't. The appropriate conclusion would be: We need demonstrations of what can be done in the classroom so we can analyze them to see why they work and the extent to which we can improve on them (with improvement measured by differences in results).

Principles of Learning

Hirsch presents seven 'consensus principles of cognitive science' that "would almost certainly enhance classroom learning, and ought also to encourage a shift in the way policymakers use educational data and research." The main problem with most of these principles is that they are not principles of teaching; however, the only thing the teachers will do is teach, and the only variables that are under our control are those that relate to teaching. Teachers can control the examples, the wording, the manner in which they test acquisition of knowledge, and so forth. If the interactions are not expressed in terms of variables that are under the control of the teacher or the source of information, however, there is no link between "teaching" and the observed learning.

All Hirsch's principles present problems (especially those that are illustrated with specific examples of instruction). Two present problems of scientific orientation.

Hirsch's Principle of Learning #1:

Prior knowledge as a prerequisite to effective learning. If this principle were restated so that it referred to teaching, it would make sense, but only in a global way. Current knowledge is a strict logical limiter of what can be taught. If a task cannot be performed successfully without understanding certain words in the directions, the task cannot be taught until the understanding of the words is taught. For instance, "When I clap, nictitate half a dozen times." The correct response is impossible without knowledge of nictitate and half a dozen. When the knowledge is present in the learner's current repertoire, the limiters have been removed and the learner would be able to perform on the task. Also, any teaching that assumes an understanding of these expressions would be possible.

Hirsch's Principle of Learning #4:

Attention determines learning. This is Hirsch's most contentious and objectionable principle. He observes "that we should not be overly distracted by the vast and unreliable literature on what will or will not properly motivate students-a debate that seems baffling to many teachers, since what motivates some students does not motivate others." The teacher is supposed to use "whatever methods may come to hand, including, above all, giving students the preparatory knowledge that will make attention meaningful."

This series of observations is like a badly coiled rope. In the first place, an extensive literature on reinforcement shows that children are quite lawful with respect to pursuing that which leads to positive reinforcement and avoiding that which leads to punishment. Reinforcement is defined functionally as a consequence of behavior that increases the probability of the child behaving in a particular way. Reinforcement is operationally what the teacher must do to "motivate" the children. It expresses the "teaching" variables that are to be manipulated, not the internal "motivation" that is to be learned or influenced.

In the second place, teachers who are schooled in the appropriate use of praise, corrections, pacing the presentation, and other details that increase the probability of the child receiving positive reinforcement, have children who attend better to what is presented and who perform better. These techniques, however, are ones that the typical teacher does not use effectively without specific training.

The contention that what motivates some students doesn't motivate others is contradicted by the performance of knowledgeable teachers' students. During instructional periods in which students have a high probability of answering items correctly, students respond at a high rate, and the teacher praises children who perform well, incidences of misbehavior are much lower than at other times of the school day. To assume, however, that teachers who have never taught low-performers effectively are good judges either of what motivates children or how to motivate them is cargo-cult logic.

Scientific answers to questions about teachers' skills come from the same arena that generates answers about the skills and performance of children: the classroom. It is certainly politically correct to say that teachers are astute judges of children's needs. Unfortunately, this declaration is not substantiated by what is observed in the classroom. Most inner-city schools have failed, which means that, for whatever reasons, the teachers failed. Either this failure is inevitable with respect to what the school is able to do or it isn't. If it isn't, the teachers will have to learn to do things quite differently from what they do now. They will have to learn new skills and apply them in a way that causes accelerated performance of their students. Once they are good at doing this, their opinions about motivation are valuable.

A related problem is Hirsch's practice of relying on what teachers report as if it were an accurate description of what goes on in the classroom. A particular account may be accurate, but the practice is speculative. Various studies in which observations were compared with what teachers and administrators indicated went on in the classroom disclosed practices and interactions quite different from those described. The conclusion of several studies was that the verbal behavior had changed a lot, but what occurred in the classroom hadn't. In one study, fourth- and fifth-grade teachers were interviewed about how closely they followed the directions in the teacher's manual for teaching skills like main idea and cause and effect. Most teachers indicated that they used the directions as something of a jumping-off place for doing their own thing, using "whatever methods may come to hand." Classroom observations yielded quite a different relationship, with teachers following the program very closely.

The point is not that teachers are unreliable reporters, but that if we don't know how reliable the reports of a particular teacher are, it's not wise to assume that the report is accurate, particularly because what a teacher reports is limited by what the teacher knows. We have encountered many kindergarten and first-grade teachers who falsely insist that their children are reading. Observations of the classroom, however, show that the children are simply reciting material that is memorized or lavishly prompted by pictures. The teachers' definition of reading prompts them to give reports that are unreliable.

Perspective

Hirsch's case is very weak. He has an underlying message that makes sense, which is that students must learn a base of knowledge if they are to perform on a range of unanticipated tasks. The reason I have taken issue with the details of his orientation, however, is that they are not scientific. They lack respect for the importance of bottom-line classroom data, which show what works and what doesn't.

By basing all our rules and decisions on what actually goes on in the classroom, we avoid tarnishing our understanding and relying on questionable theories. And that's the whole point that Feynman tried to make in his chapter: The goal of scientific investigation is to be honest and not to be influenced by what we would like to believe, what people have said we should believe, what is politically correct to believe, and above all what seems believable. To yield to these influences is to buy a ticket to the cargo cults.

References

Biemiller, A. & Slonim, N. (2001). Estimating root word vocabulary growth in normative and advantaged populations: Evidence for a common sequence of vocabulary acquisition. Journal of Educational Psychology, 93 (3), 498–520.

Engelmann, S. (1971). Does the Piagetian approach imply instruction? In D. R. Green, M. P. Ford, & G. B. Flamer (Eds.), Measurement and Piaget (pp. 118-126). Carmel, CA: California Test Bureau.

Feynman, R. P., as told to R. Leighton. Edited by E. Hutchings (1985). "Surely You're Joking, Mr. Feynman!": Adventures of a curious character. (pp. 338-346, 288-302). New York: W.W. Norton & Company.

Hirsch, Jr., E. D. (2002). Classroom research and cargo cults. Policy Review, No. 115. Palo Alto, CA: Hoover Institution, Stanford University.

Hirsch, Jr., E. D. (1992). What your third grader needs to know: Fundamentals of a good third-grade education. New York: Dell Publishing.

Data on the RITE Program appear in: Carlson, C. D., Francis, D. J., Tatif, L., Priebe, S., & Ferguson, C. (2001-2002). RITE program external evaluation 2001-2002. Houston, TX: The Texas Institute for Measurement, Evaluation, and Statistics.